In 2024, can GPU prices be reduced?


As 2023 fades into the past and we look back at the chip that drew the most industry attention that year, most people would probably cast their vote for the GPU. Throughout 2023, we constantly heard about "GPU shortages," "NVIDIA's surge," and "Jensen Huang sharing the secrets to success."

Undoubtedly, the generative AI wave of 2023 swept everything before it, with technology companies worldwide rushing into an AI arms race. The result was a crunch in computing power and a global scramble for GPUs.

01

The World's Hottest GPUs

In 2023, there was an endless stream of news about large AI models. OpenAI, Baidu, Google, Tencent, and others were all building their own. We won't delve into the specifics of these models, but what's clear is that building them requires GPUs, and the most in-demand GPUs in 2023 were undoubtedly the A100 and H100.

The A100 was at the forefront. OpenAI used 3,617 HGX A100 servers containing nearly 30,000 NVIDIA GPUs. Chinese cloud computing experts believe that the minimum computing power needed to handle large AI models is 10,000 NVIDIA A100 chips.


Research from TrendForce indicates that, measured in A100-equivalent computing power, the GPT-3.5 model requires as many as 20,000 GPUs, and might need more than 30,000 once commercialized.

The H100 is just as sought after. It went into mass production in September 2022 and is manufactured by TSMC on its 4N process. Compared with its predecessor, the A100, a single H100 card offers 3.5 times the inference speed and 2.3 times the training speed; in a server cluster, training can be up to 9 times faster, cutting a week's workload to about 20 hours.
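As a rough check on the cluster figure above, the following Python sketch divides a week of training time by the quoted 9x speedup. The function name is illustrative only, and the sketch assumes the speedup applies uniformly to the whole workload, which real training jobs may not achieve.

```python
HOURS_PER_WEEK = 7 * 24  # 168 hours in a week


def hours_after_speedup(baseline_hours: float, speedup: float) -> float:
    """Return the run time once a workload is accelerated by `speedup`.

    Assumes an ideal, uniform speedup (an assumption, not a measured result).
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_hours / speedup


# Quoted figure: cluster training is up to 9 times faster than on the A100.
cluster_hours = hours_after_speedup(HOURS_PER_WEEK, 9.0)
print(f"{cluster_hours:.1f} hours")
```

Under that assumption the week shrinks to a little under 19 hours, which is in line with the roughly 20 hours quoted.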

Taking overall system cost into account, the H100 delivers 4 to 5 times more performance per dollar than the A100. Although the H100 costs more per card, its gains in training and inference efficiency make it the more cost-effective product.

Because of this, tech giants are all scrambling to buy NVIDIA H100 GPUs, or more precisely, NVIDIA's 8-GPU HGX H100 SXM servers. NVIDIA has become the most closely watched "seller of shovels." For the Chinese market, it has also launched a fully export-compliant, cut-down version of the H100, following the approach it took with the A800 (a cut-down version of the A100).

02

Eager Buyers

"GPU is the new Bitcoin of the era." OpenAI's Chief Scientist Ilya Sutskever wrote this statement on his personal X account. Against the backdrop of a surge in computing power, NVIDIA's GPUs have become "hard currency."

More strikingly, some overseas startups have even begun to use GPUs as loan collateral, with one Silicon Valley startup securing $2.3 billion in debt financing backed by its H100s.

Companies buying H100s and A100s fall into three groups. The first needs more than 1,000 units: startups training large language models (LLMs), such as OpenAI and Anthropic; cloud service providers, such as Google Cloud, AWS, and Tencent Cloud; and other large companies, such as Tesla. The second needs more than 100 units: startups doing extensive fine-tuning of open-source models. The third needs around 10 units: most startups and open-source groups, which are keen to use the outputs of large models to fine-tune smaller ones.

How many GPUs do these buyers need? OpenAI may require 50,000, Inflection 22,000, and Meta 25,000, while the large cloud vendors (Azure, Google Cloud, AWS, and Oracle) may each need 30,000. Lambda, CoreWeave, and other private clouds may need 100,000 in total, and Anthropic, Helsing, Mistral, and Character may each require 10,000.

These companies alone are estimated to need about 432,000 H100s. At approximately $35,000 per unit, that is about $15 billion worth of GPUs. And this does not even include Chinese companies such as ByteDance, Baidu, and Tencent.
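The demand arithmetic above can be reproduced with a short Python sketch. The dictionary simply restates the per-buyer estimates from the text, and the variable and function names are illustrative. Note that the itemized estimates add up to less than the 432,000 total quoted; the sketch reports both figures and does not try to reconcile them.

```python
H100_UNIT_PRICE_USD = 35_000   # approximate per-unit price quoted in the text
QUOTED_TOTAL_UNITS = 432_000   # total quoted in the text

# Per-buyer estimates as listed in the text (all speculative "may need" figures).
itemized_demand = {
    "OpenAI": 50_000,
    "Inflection": 22_000,
    "Meta": 25_000,
    "Azure": 30_000,
    "Google Cloud": 30_000,
    "AWS": 30_000,
    "Oracle": 30_000,
    "Lambda, CoreWeave and other private clouds": 100_000,
    "Anthropic": 10_000,
    "Helsing": 10_000,
    "Mistral": 10_000,
    "Character": 10_000,
}


def fleet_value_usd(units: int, unit_price_usd: int) -> int:
    """Return the total purchase value of `units` GPUs at a flat unit price."""
    return units * unit_price_usd


itemized_units = sum(itemized_demand.values())
quoted_value = fleet_value_usd(QUOTED_TOTAL_UNITS, H100_UNIT_PRICE_USD)
itemized_value = fleet_value_usd(itemized_units, H100_UNIT_PRICE_USD)

print(f"Itemized units: {itemized_units:,} (${itemized_value / 1e9:.1f}B)")
print(f"Quoted units:   {QUOTED_TOTAL_UNITS:,} (${quoted_value / 1e9:.1f}B)")
```

At $35,000 per unit, the quoted 432,000 units come to $15.12 billion, matching the "about $15 billion" in the text, while the itemized figures sum to 357,000 units.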

In the race to build large AI models, the countries in contention (China, the United States, Saudi Arabia, and the United Arab Emirates) are all prized customers of NVIDIA.

Saudi Arabia has purchased at least 3,000 NVIDIA H100 chips through the public research institution King Abdullah University of Science and Technology (KAUST). The chips, worth about $120 million in total, are scheduled to be delivered in full by the end of 2023 and will be used to train large AI models. The UAE has also secured access to thousands of Nvidia chips and has launched its own open-source large language model, "Falcon40B", which was trained on 384 A100 chips.

03

GPU Shipments

The shipment volume of Nvidia's H100 is also closely watched. Research firm Omdia disclosed that Nvidia shipped 900 tons of H100s in the second quarter of 2023. Assuming a single H100 GPU weighs approximately 3 kilograms, Nvidia sold about 300,000 H100 units in Q2.
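The weight-to-units conversion above is simple enough to sketch in Python. The helper name is illustrative, and the sketch assumes metric tons (1,000 kg each) and the approximate 3 kg per GPU given in the text.

```python
KG_PER_METRIC_TON = 1_000  # assumption: the 900 tons are metric tons


def units_from_weight(total_tons: float, kg_per_unit: float) -> float:
    """Estimate a unit count from total shipped weight and per-unit weight."""
    if kg_per_unit <= 0:
        raise ValueError("kg_per_unit must be positive")
    return total_tons * KG_PER_METRIC_TON / kg_per_unit


# 900 tons shipped in Q2 2023 at roughly 3 kg per H100.
estimated_units = units_from_weight(900, 3.0)
print(f"{estimated_units:,.0f} units")
```

Because the per-unit weight is only approximate, the resulting 300,000 should be read as an order-of-magnitude estimate rather than an exact shipment count.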

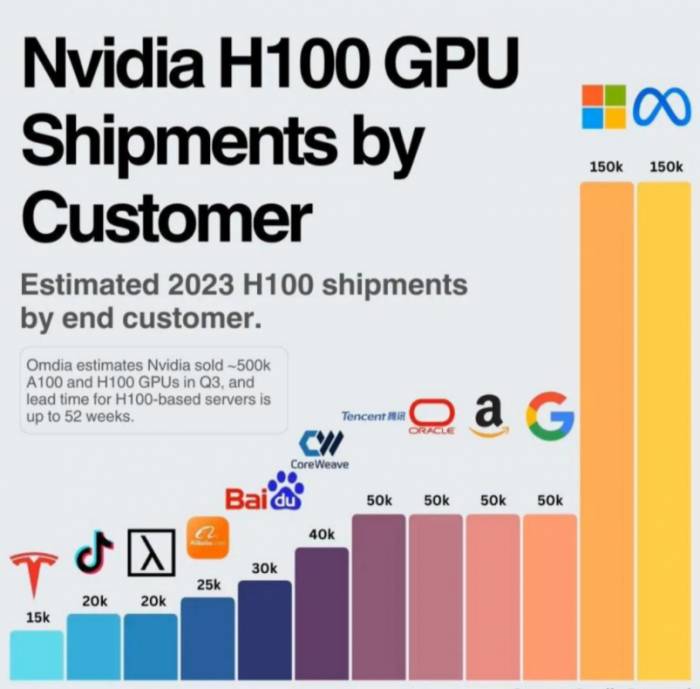

By the third quarter, Nvidia had sold approximately 500,000 H100 and A100 GPUs. The number of GPUs available to each company was limited; Meta and Microsoft each purchased 150,000 H100 GPUs, while Google, Amazon, Oracle, and Tencent each procured 50,000 units.

Demand on this scale means that H100-based servers take 36 to 52 weeks to deliver. According to Nvidia's official statement, all of its GPUs due for delivery before the first quarter of 2024 have already sold out.

Industry estimates suggest that Nvidia's shipments next year will reach between 1.5 million and 2 million units.

04

In 2024, Can GPU Prices Decrease?

Whether GPU prices can come down will depend on supply and demand next year. The chart above, provided by GPUUtils, lists the technology companies with the most direct influence on supply and demand in the GPU market, including OpenAI, the developer of ChatGPT; TSMC; Microsoft; Meta; and the AI startup Inflection, which raised $1.3 billion in funding in just one year.

Buyers

Some people do not want to buy. Google and Meta both think Nvidia's GPUs are too expensive.

Meta recently announced that it has built its own DLRM inference chip, which is already widely deployed. Meta candidly admits that its upcoming AI chip cannot directly replace Nvidia's, but says the in-house chip can reduce costs.

Even during its two-hour keynote at Google I/O, Google kept praising Nvidia's H100. That has not stopped Google from "looking for a better horse while riding a donkey": Google's cloud servers have already started using its own TPU.

Google released the TPU v5e in August 2023, and it has become a powerful force in AI hardware, tailored specifically for large language models and generative AI. Compared with its predecessor, the TPU v5e delivers 2 times the training performance per dollar and 2.5 times the inference performance per dollar, which can cut costs substantially. Its multi-chip architecture can connect tens of thousands of chips, lifting earlier limits and paving the way for very large AI workloads.

Some people cannot buy. China is Nvidia's third-largest market, accounting for more than one-fifth of its revenue. With the U.S. government announcing further bans on sales of Nvidia's H800 and A800 chips to China, the lost sales will inevitably hurt Nvidia.

In addition, AI may face a downturn next year. The AI craze of 2023 produced large models that stunned the industry, but it also had no shortage of hype. ChatGPT was undoubtedly the world's biggest traffic draw in 2023. Thanks to it, visits to the OpenAI website exceeded 1.8 billion in April, putting it among the top 20 sites by global traffic. However, data from the web analytics company Similarweb shows that after a half-year surge, ChatGPT's visits fell for the first time in June, down 9.7% month on month.

After more than 200 days of large-model entrepreneurship, the mood among explorers has shifted from idealistic excitement to practical implementation. Running large models depends on a large number of high-performance chips, and building and maintaining generative AI tools is very expensive in itself. Large companies can absorb this, but for many organizations and creators the cost is unaffordable.

For generative AI, 2024 will bring another test before the public. Industry analysts believe that generative AI was heavily overhyped in 2023, because the underlying technologies still need to overcome many obstacles before they can be brought to market.

After the tide goes out, how much demand for high-performance GPUs will remain?

Sellers

Let's look first at NVIDIA, the largest seller, and focus on two questions: whether it can supply enough GPUs, and whether it will have new products next year to stimulate demand.

On the supply side, NVIDIA is known to work only with TSMC to manufacture the H100. TSMC has four nodes that provide 5nm-class capacity: N5, N5P, N4, and N4P. The H100 is produced only on the 4N node, an enhanced 5nm-class node, and NVIDIA must share that capacity with Apple, AMD, and Qualcomm. The A100, by contrast, is made on TSMC's N7 line. In the short term, Samsung and Intel cannot relieve NVIDIA's supply constraints because of process technology issues. NVIDIA's GPU supply is therefore expected to remain tight next year.

On the product side, NVIDIA announced the H100's successor, the H200, in November 2023. It claims that the H200 runs inference nearly twice as fast as the H100 on LLMs such as Llama2. Starting in 2024, Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure will be the first cloud service providers to deploy H200-based instances. Although the H200's price has not been announced, it will almost certainly be higher than the H100's current price of $25,000 to $40,000 per unit.

On both counts, there is still considerable uncertainty about supply and demand for NVIDIA's GPUs. A seller's market, however, will draw in more manufacturers.

Another major seller of GPUs next year will be AMD, which has regained its focus.

According to the latest financial report, AMD expects its GPU revenue to reach $400 million in the fourth quarter and to exceed $1 billion by the end of 2024. Its CEO, Dr. Lisa Su, is very optimistic about next year: "MI300 will become the fastest product to reach $1 billion in sales since 2020."

In November 2023, AMD officially released its competitor to the H100, the MI300. According to AMD's demonstration, a server with eight MI300X units can deliver up to 1.6 times the large-model inference performance of a comparable H100 server. For AMD, this kind of direct competition is quite rare.

Interestingly, Meta, Microsoft, and OpenAI said at an AMD investor event that they will use AMD's latest AI chip, the Instinct MI300X, in the future.

NVIDIA, however, has pushed back hard against AMD, publishing an official blog post to rebut what it saw as AMD's unobjective evaluation. NVIDIA stated that if the H100 were benchmarked properly with optimized software, its performance would far exceed that of the MI300X. Since the MI300 has not yet been deployed in production, it is difficult for us to settle the "dispute" between AMD and NVIDIA, but either way, AMD has reached the starting line in high-performance GPUs.

In summary, the GPU market in 2024 is still full of variables. Once the large-model boom cools, buyers refuse to keep overpaying, and more sellers enter the market, will GPUs still command high prices? Everyone will have their own answer. From a market perspective, however advanced a technology is today, it eventually becomes commonplace. It all depends on how the GPU players fight it out in 2024.